- JUPYTER NOTEBOOK ONLINE GPU HOW TO

- JUPYTER NOTEBOOK ONLINE GPU INSTALL

- JUPYTER NOTEBOOK ONLINE GPU CODE

- JUPYTER NOTEBOOK ONLINE GPU DOWNLOAD

When you’re training a machine learning model on your local machine, you’re likely to have trouble with the storage and bandwidth costs that come with downloading and storing the dataset required for training. Deep learning datasets can be massive, ranging from 20 to 50 GB. Downloading them is most challenging if you live somewhere high-speed internet isn’t available.

The most efficient way to use datasets is to download them through a cloud interface, rather than manually uploading them from a local machine. Thankfully, Colab gives us a variety of ways to download datasets from common data hosting platforms. To download an existing dataset from Kaggle, we can follow the steps outlined below:
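The Kaggle steps themselves are not preserved in this copy, so the following is only a minimal sketch of one common route, assuming the official `kaggle` command-line client is installed (`pip install kaggle`) and that an API token file `kaggle.json` has been downloaded from a Kaggle account. The helper names, the `data` destination folder and the `owner/dataset-name` slug are hypothetical placeholders.

```python
import shutil
import subprocess
from pathlib import Path


def install_kaggle_token(token_path, config_dir=None):
    """Copy kaggle.json to the folder the Kaggle client reads (~/.kaggle by default)."""
    config_dir = Path(config_dir) if config_dir else Path.home() / ".kaggle"
    config_dir.mkdir(parents=True, exist_ok=True)
    target = config_dir / "kaggle.json"
    shutil.copy(token_path, target)
    # The client warns when the token is readable by other users.
    target.chmod(0o600)
    return target


def kaggle_download_command(dataset, dest="data"):
    """Build the CLI call that downloads and unzips a dataset into dest."""
    return ["kaggle", "datasets", "download", "-d", dataset, "-p", dest, "--unzip"]


def download_dataset(dataset, dest="data"):
    """Run the download; needs the kaggle client and a valid token."""
    subprocess.run(kaggle_download_command(dataset, dest), check=True)


if __name__ == "__main__":
    # "owner/dataset-name" is a placeholder, not a real dataset.
    print(" ".join(kaggle_download_command("owner/dataset-name")))
```

In a Colab cell the same command can be run directly as a shell command by prefixing it with an exclamation point, so the download happens on the cloud machine rather than over a local connection.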

JUPYTER NOTEBOOK ONLINE GPU HOW TO

After running the install command, click RESTART RUNTIME for the newly installed version to be used. In this example, the TensorFlow version changes from ‘2.3.0’ to ‘1.5.0’.

The test accuracy is around 97% for the MNIST model trained in this tutorial. Not bad at all, but this was an easy one. Training models usually isn’t that easy, and we often have to download datasets from third-party sources like Kaggle. So let’s see how to download datasets when we don’t have a direct link available.

JUPYTER NOTEBOOK ONLINE GPU INSTALL

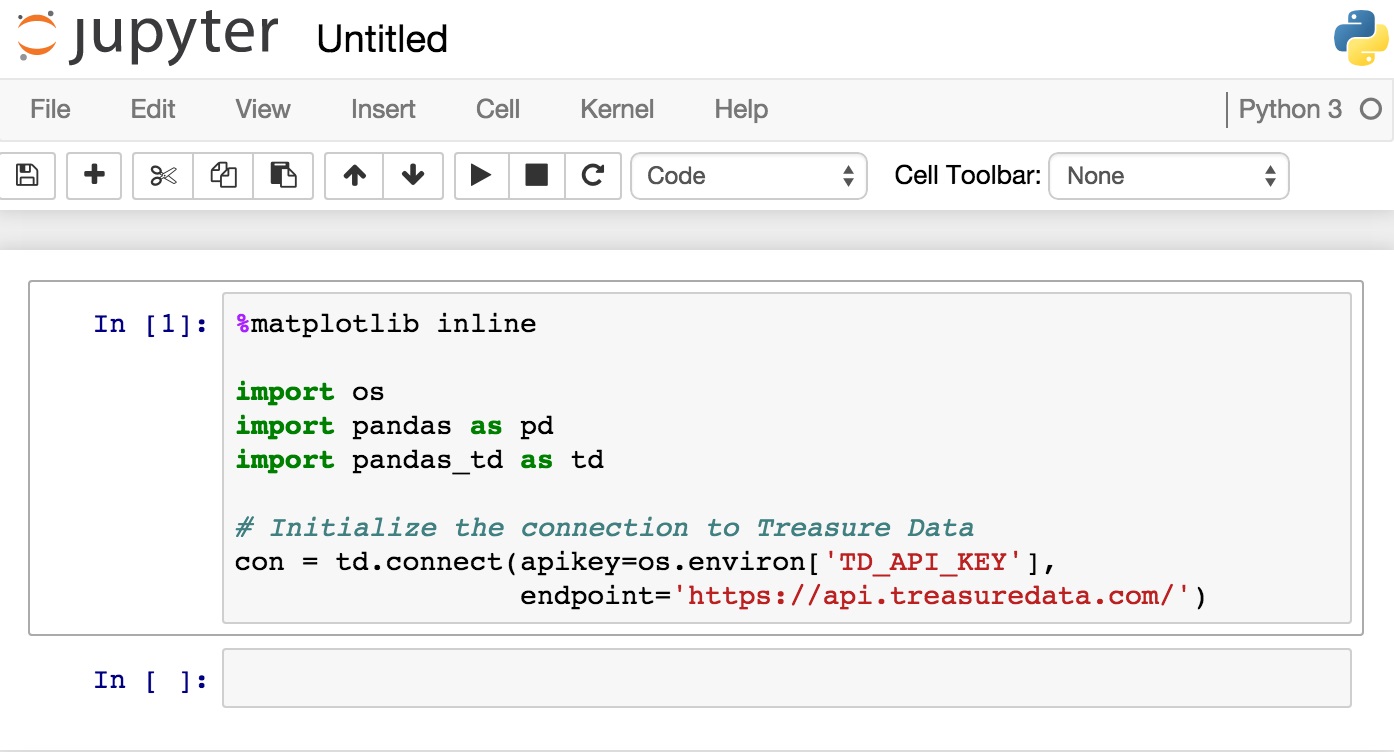

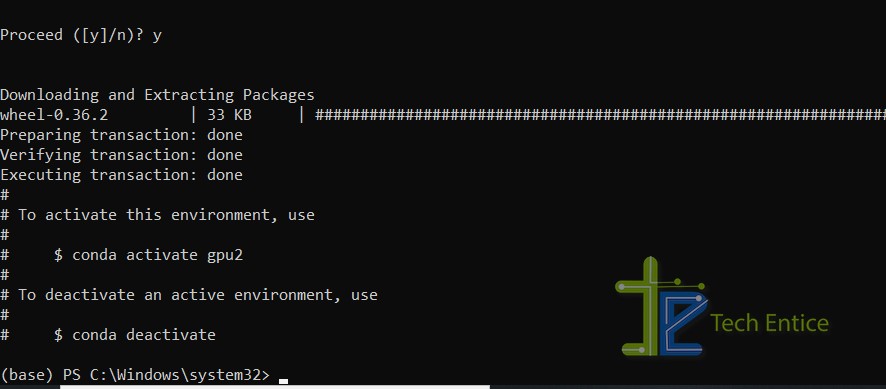

The package manager used for installing packages is pip. To install a particular version of TensorFlow, use this command: `!pip3 install tensorflow==1.5.0`
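As a hedged illustration of pinning a version, the sketch below builds the equivalent pip call from Python and reads back the installed version. The helper names are hypothetical, and whether pip can actually find a given release (such as 1.5.0) depends on the Python version of the runtime.

```python
import sys
from importlib import metadata


def pip_install_command(package, version):
    """Build a pip call that pins an exact version using '=='."""
    return [sys.executable, "-m", "pip", "install", f"{package}=={version}"]


def installed_version(package):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    cmd = pip_install_command("tensorflow", "1.5.0")
    print("Install command:", " ".join(cmd))
    # After installing and restarting the runtime, this should report the new version.
    print("Installed TensorFlow:", installed_version("tensorflow"))
```

Note the double equals sign: a single `=` is not a valid version specifier for pip.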

To use a library that isn’t pre-installed, or a different version of one that is, you’ll need to install packages manually.

JUPYTER NOTEBOOK ONLINE GPU CODE

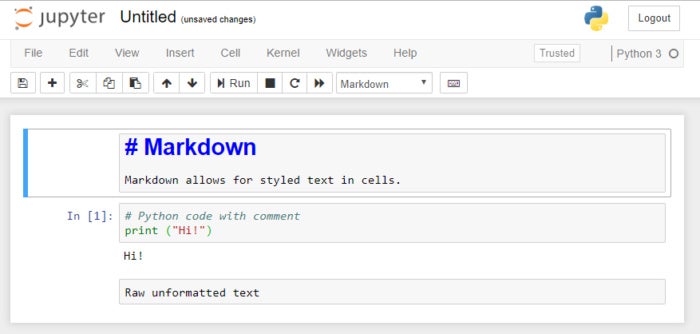

Running code in the cloud means you can train large-scale ML and DL models even if you don’t have access to a powerful machine or a high-speed internet connection. Google Colab supports both GPU and TPU instances, which makes it a good fit for deep learning and data analytics enthusiasts who are limited by the compute available on local machines. Since a Colab notebook can be accessed remotely from any machine through a browser, it’s well suited for commercial purposes as well.

For example, let’s look at training a basic deep learning model to recognize handwritten digits from the MNIST dataset. The data is loaded from the standard Keras dataset archive. The model is very basic: it classifies each image as one of the digits.

Setup: import the necessary libraries with `import tensorflow as tf`, then load the training data and split it into train and test sets with `(x_train, y_train), (x_test, y_test) = mnist.load_data()`. The output for this code snippet is a `Downloading data from` line followed by the progress counter `11493376/11490434 - 0s 0us/step`.

Next, we define the model in Python. The listing has three commented steps: define the model; define the optimizer, loss function and evaluation metric; and extend the base model to predict softmax output, which begins `probability_model = tf.keras.Sequential([`.

You can use the code cell in Colab not only to run Python code but also to run shell commands. An exclamation point at the start of a line tells the notebook cell to run the rest of the line as a shell command. Most general packages needed for deep learning come pre-installed. In some cases, you might need less popular libraries, or you might need to run code on a different version of a library.
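The MNIST listing described in this section survives only in fragments, so the following is a sketch of how the steps could fit together, not the original code. The layer sizes, the dropout rate, the `adam` optimizer and the five epochs are assumptions; only the commented steps, `mnist.load_data()` and the `probability_model` wrapper come from the text.

```python
def train_mnist(epochs=5):
    """Train a small digit classifier on MNIST and return (model, test_accuracy)."""
    # Imported here so this file can be loaded without TensorFlow installed.
    import tensorflow as tf

    # Load training data and split into train and test sets.
    mnist = tf.keras.datasets.mnist
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    # Scale pixel values from 0..255 to 0..1 (assumed preprocessing step).
    x_train, x_test = x_train / 255.0, x_test / 255.0

    # Define model (architecture is an assumption).
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(10),  # one logit per digit
    ])

    # Define optimizer, loss function and evaluation metric.
    model.compile(
        optimizer="adam",
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=["accuracy"],
    )

    model.fit(x_train, y_train, epochs=epochs)
    _, test_accuracy = model.evaluate(x_test, y_test, verbose=2)

    # Extend the base model to predict softmax output.
    probability_model = tf.keras.Sequential([model, tf.keras.layers.Softmax()])
    print(probability_model.predict(x_test[:1]))
    return model, test_accuracy


if __name__ == "__main__":
    _, accuracy = train_mnist()
    print("Test accuracy:", accuracy)
```

The final dense layer outputs raw logits, which is why the loss is created with `from_logits=True` and a separate softmax wrapper is used when probabilities are needed.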

If you’re a programmer who wants to explore deep learning and needs a platform to help you do it, this tutorial is exactly for you.

Colaboratory by Google (Google Colab for short) is a Jupyter notebook based runtime environment which allows you to run code entirely in the cloud. Google Colab is a great platform for deep learning enthusiasts, and it can also be used to test basic machine learning models, gain experience, and develop an intuition about deep learning aspects such as hyperparameter tuning, data preprocessing, model complexity, overfitting and more.

On using more than one GPU, Salvador Marti Roman wrote in a GitHub thread: for anyone interested in actually doing this, the suggestion to just add TF2’s mirrored strategy worked like a charm. Just adding these lines in the main works rather well: `mirrored_strategy = tf.distribute.MirroredStrategy()` followed by `with mirrored_strategy.scope():` wrapping the rest of the code. Remember to remove the memory growth line. You also need to adjust your loss and wrap your dataset loader accordingly.
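The mirrored strategy tip can be sketched as follows. This is an illustration under assumptions, not the code from the quoted thread: the small Keras model is a placeholder, and it is compiled with the built-in Keras training API, which handles loss scaling and dataset distribution itself. A custom training loop would need the loss and dataset adjustments the comment mentions.

```python
def build_mirrored_model():
    """Build and compile a placeholder Keras model inside a MirroredStrategy scope."""
    # Imported here so this file can be loaded without TensorFlow installed.
    import tensorflow as tf

    # Check which GPUs the runtime exposes (an empty list means CPU only).
    gpus = tf.config.list_physical_devices("GPU")
    print("GPUs visible to TensorFlow:", len(gpus))

    mirrored_strategy = tf.distribute.MirroredStrategy()
    print("Replicas in sync:", mirrored_strategy.num_replicas_in_sync)

    # Variables created inside the scope are mirrored across all replicas.
    with mirrored_strategy.scope():
        model = tf.keras.Sequential([
            tf.keras.Input(shape=(28, 28)),
            tf.keras.layers.Flatten(),
            tf.keras.layers.Dense(128, activation="relu"),
            tf.keras.layers.Dense(10),
        ])
        model.compile(
            optimizer="adam",
            loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
            metrics=["accuracy"],
        )
    return model, mirrored_strategy


if __name__ == "__main__":
    model, strategy = build_mirrored_model()
    model.summary()
```

On a runtime with a single GPU or none, the strategy falls back to one replica, so the same code still runs.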
